Bias is good

November 15, 2024

Excerpt: If you haven’t heard of System 1 and System 2, you’ve probably heard one of its analogues. People who say ‘don’t let your amygdala hijack your frontal lobes’, or ‘get out of the sympathetic and into the parasympathetic nervous system’, or ‘something something vagus nerve’ are using pseudo-brain science to get at the same thing. But the thing everyone seems to have taken away from this book is the thing we always take away—System 1 stuff, a.k.a. bias is a bad thing. This is not what Kahneman was going for. Kahneman was trying to show us how both System 1 and System 2 have their place.

Ideology

Bias reduces noise—if you know roughly what to expect, then being biased by those expectations means you won’t get distracted by less relevant data points.


Article Status: Complete (for now).

I complain a lot around here about ways people think about thinking that I think are silly. Mostly because it’s both fun and easy to criticise other people’s work, and I like to lean into fun and easy.

But, really, this site is all about challenging ideologies to see which ones are worth hanging on to. So probably I need to sometimes talk about ways of thinking that I think aren’t silly.

And one way of thinking I think isn’t silly is biased thinking.

Bias improves measurement

In a previous article I somewhat confusedly alluded to the fact that injecting bias results in fewer errors, both in our decision-making and in our statistical analyses. I want to explain that a bit better here. First, though, we need to visit a first year psychology class.

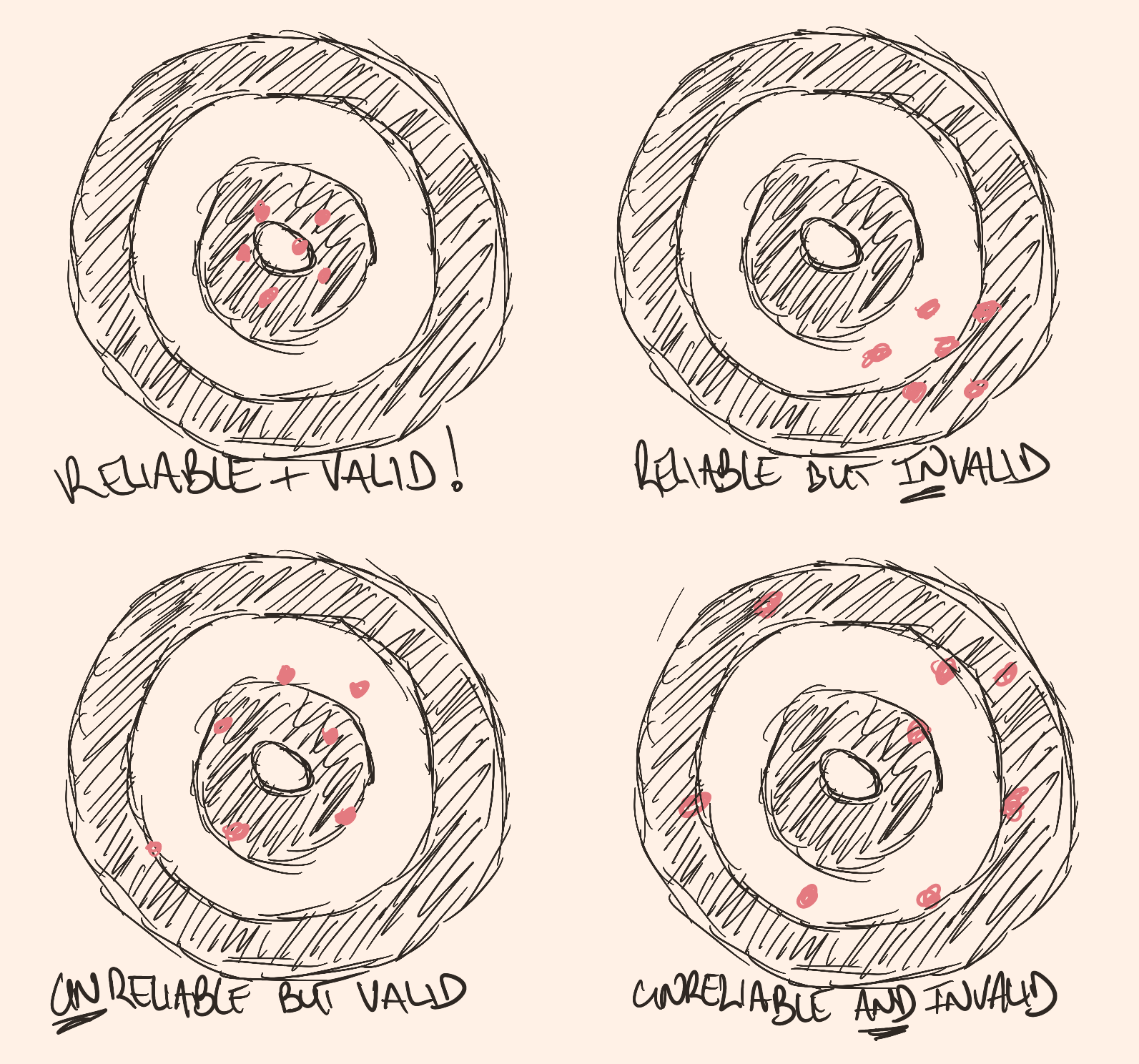

First year psychology students’ only critique of any journal article will be about its reliability and validity. They love these terms. I will teach them to you, so you can also feel as clever as we all once did, and then I’ll make my point.

You see, psychology measures should be both valid and reliable. Validity is where the thing measures what it’s supposed to be measuring and not something else—its accuracy, I guess. Reliability, on the other hand, refers to the consistency of a measure—something that’s reliable will give you the same results if you measure the same thing more than once. There’s this classic ‘target’ picture we use to illustrate this:

Reliable valid measures hit close to the target all the time. Reliable invalid measures hit off-target, but in the same place each time. Unreliable valid measures hit roughly near the target, but are off by random amounts. Unreliable and invalid measures just hit randomly.
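For anyone who prefers numbers to dartboards, the four targets can be mimicked with a toy simulation. This is only a sketch under some loud assumptions: shots land on a one-dimensional line rather than a board, invalidity is modelled as a fixed `offset` from the bullseye, unreliability as the `spread` of Gaussian noise, and every name and number here is made up for illustration.

```python
import random
import statistics

BULLSEYE = 0.0  # the true value we are trying to measure


def throw_darts(offset, spread, n=1000, seed=1):
    """Simulate n measurements: a fixed offset (invalidity) plus random scatter (unreliability)."""
    rng = random.Random(seed)
    return [BULLSEYE + offset + rng.gauss(0, spread) for _ in range(n)]


def describe(shots):
    """Return (distance of the cluster's centre from the bullseye, scatter around that centre)."""
    miss = abs(statistics.fmean(shots) - BULLSEYE)
    scatter = statistics.pstdev(shots)
    return miss, scatter


# Hypothetical settings for the four targets.
targets = {
    "reliable, valid": throw_darts(offset=0.0, spread=0.5),
    "reliable, invalid": throw_darts(offset=5.0, spread=0.5),
    "unreliable, valid": throw_darts(offset=0.0, spread=5.0),
    "unreliable, invalid": throw_darts(offset=5.0, spread=5.0),
}

for name, shots in targets.items():
    miss, scatter = describe(shots)
    print(f"{name:20s} miss={miss:5.2f}  scatter={scatter:5.2f}")
```

A small `miss` is roughly what validity looks like here, and a small `scatter` is roughly what reliability looks like. The two are set independently, which is the whole point of the picture.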

So, something that’s both reliable and valid would measure the right thing consistently. A good example of this is a working clock. It’s measuring time, and if it’s set to the right time, then every time you look at it, it reliably gives you the right time.

Something that’s reliable but invalid would be a stopped clock. This is perfectly reliable—it’ll give you the exact same answer every time you look. But you have that old saying: ‘a broken clock is right twice a day’—not particularly useful as a measurement, because it’s not measuring time any more.

We could probably stretch our broken clock metaphor to unreliable but valid measures. If your watch is slow, or fast, or erratic, it’s still kind of tracking time, but very badly. My watch gains about five minutes a day, depending on how wound it is. It’s roughly aligned to the time, but I can’t rely on it.

And then something that’s unreliable and invalid is just random, basically. It’s hard to stretch our clock metaphor to this, but maybe something like the time on your microwave. If you often switch it off at the powerpoint—switching the plug for the kettle or something—then whatever time it’s showing is relative to nothing in particular.1 It’s not reliable or valid.

So, say, you’re measuring someone’s personality. You want their answers to your survey to be roughly the same, survey to survey—reliable—or else everyone’s wasting their time. The whole point of measuring personality is that it’s stable, and it sort of predicts behaviour. If it changed from day to day, it wouldn’t be interesting at all.

Similarly, you want your survey questions to be asking questions about personality, and not something else. Like, are they grumpy because they’re not very agreeable as a person, or are they grumpy because they’re just hungry right now?2 You want it to be a valid measure.
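One common way to put a number on "roughly the same, survey to survey" is a test-retest correlation: give the same people the same survey twice and correlate the scores. The sketch below is a toy version, assuming each score is a stable trait plus some passing state (hunger, say) modelled as Gaussian noise. The function names, sample size, and noise levels are all hypothetical.

```python
import random


def pearson_r(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def retest_correlation(state_noise, people=500, seed=7):
    """Survey the same simulated people twice and correlate their two scores."""
    rng = random.Random(seed)
    traits = [rng.gauss(0, 1) for _ in range(people)]          # stable personality
    first = [t + rng.gauss(0, state_noise) for t in traits]    # survey 1: trait + mood of the day
    second = [t + rng.gauss(0, state_noise) for t in traits]   # survey 2: same trait, new mood
    return pearson_r(first, second)


print("little state noise:", round(retest_correlation(0.3), 2))
print("lots of state noise:", round(retest_correlation(2.0), 2))
```

When the passing state barely moves the score, the two administrations agree closely. When it swamps the trait, they barely agree at all, even though nobody's personality changed between surveys.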

Now you, like all first year psych students, have been introduced to some of the most basic principles of critical scientific thinking, and I can make my point.

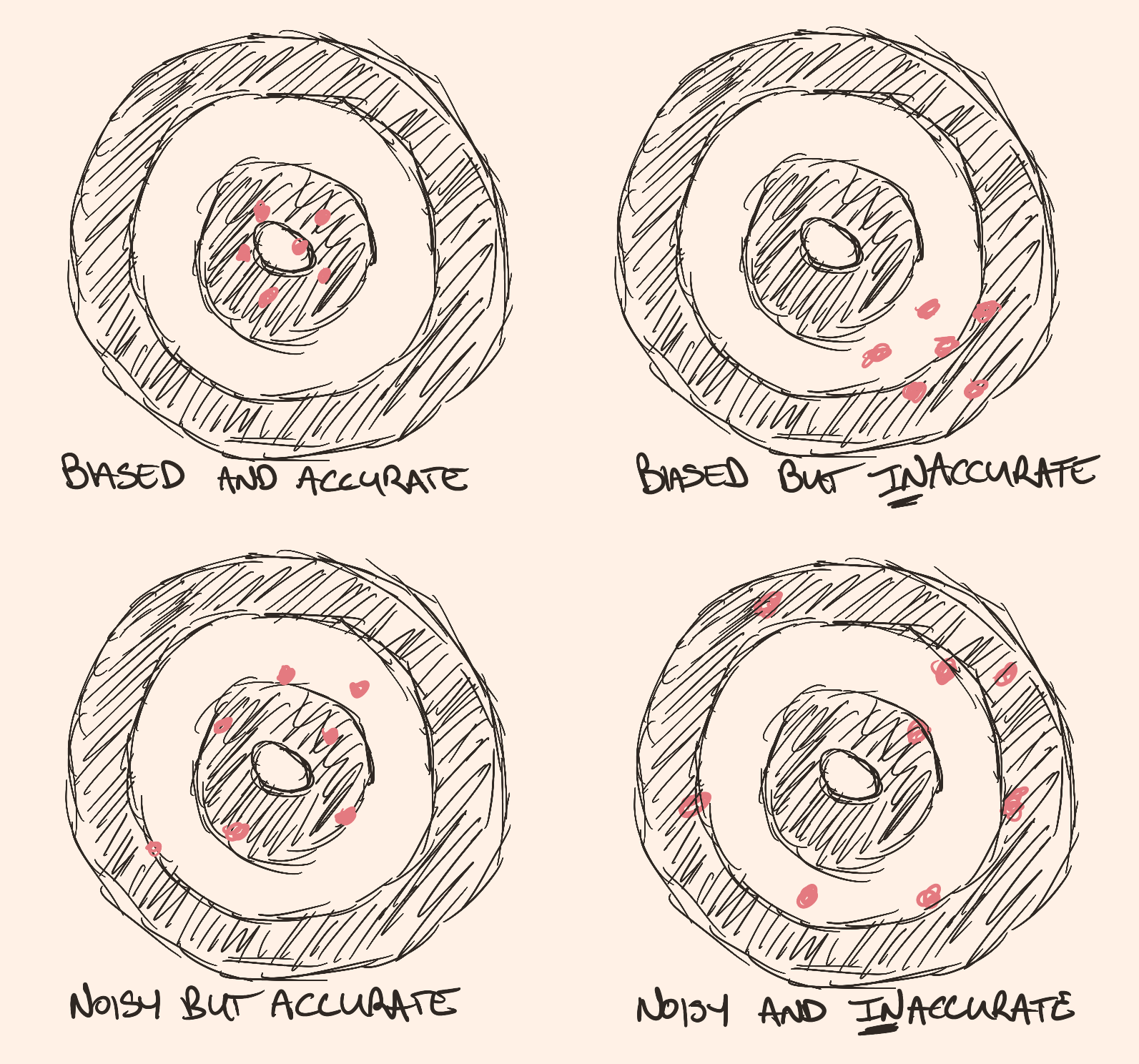

One of the main issues with psychological research is that it’s hard to measure abstract psychological constructs. They’re noisy. Just like my example above, how we behave is influenced by whether we’re hungry and all sorts of other nonsense. So our measures are often unreliable. This is where bias comes in to help:

Biased measures are the same as reliable measures. They can be valid or invalid, but they cluster together. Noisy measures are the same as unreliable ones: they too can be valid or invalid, but they don’t cluster together. They’re noisy.

You see, bias is a way of reducing noise. If you know roughly what to expect, then you won’t get distracted by less relevant data points. Obviously, bias is bad when you don’t know what to expect, or when what you expect is wrong. And this is what people are normally worried about when they invoke the concept of bias. But, since the world is very noisy, we usually need bias:

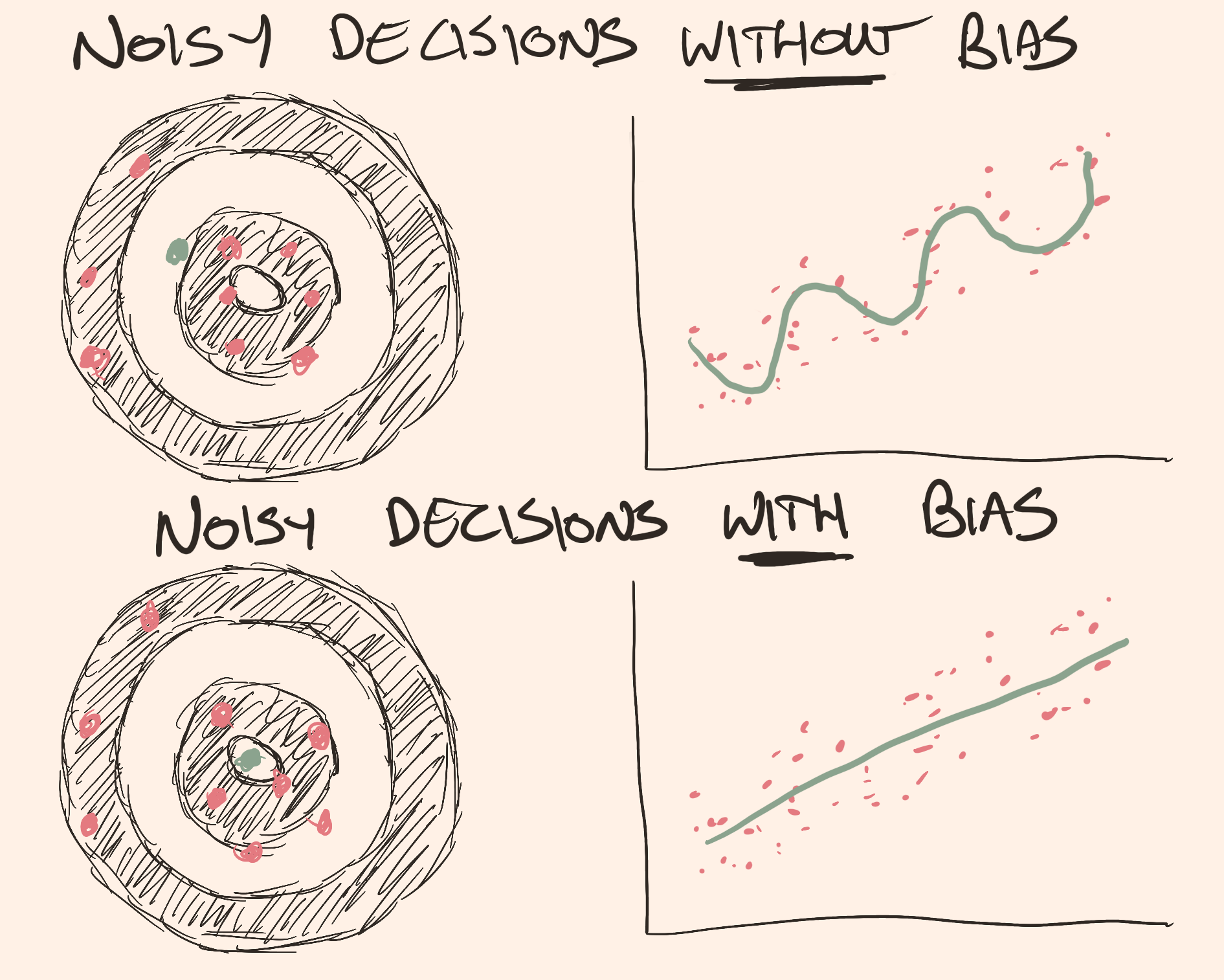

If we return to our dartboards, we have some results that are near on-target, but a couple are way off. Without bias (the expectation that we’re on target) we’d assume our measure is off, pulled off-target by the couple of outliers. With bias, we ignore the outliers, and we notice that we’re mostly on-target. Or, for a more familiar example, with a noisy scatterplot, without bias we might pay attention to every data point and draw a wiggly line to connect them all. With bias, we ignore the noise and notice that a straight line better describes the data points.
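The scatterplot version is easy to try out. The sketch below is a toy under stated assumptions: the true relationship really is a straight line (a hypothetical `y = 2x + 1`), the noise is Gaussian, the "wiggly line" is plain connect-the-dots interpolation, and all names and numbers are invented for illustration. It repeats the experiment many times and measures how far each model lands from the truth at points in between the observed ones.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for a straight line; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


def connect_dots(xs, ys, x):
    """Wiggly model: linear interpolation between neighbours (assumes xs are 0, 1, 2, ...)."""
    left = min(int(x), len(xs) - 2)
    frac = x - xs[left]
    return ys[left] + frac * (ys[left + 1] - ys[left])


def truth(x):
    """Hypothetical true relationship behind the noisy data."""
    return 2.0 * x + 1.0


def compare(trials=200, noise=3.0, seed=0):
    """Average squared distance from the truth for the straight line and the wiggly line."""
    rng = random.Random(seed)
    xs = [float(i) for i in range(20)]        # where we observed data
    new_xs = [i + 0.5 for i in range(19)]     # in-between points we want to predict
    line_err = dots_err = 0.0
    for _ in range(trials):
        ys = [truth(x) + rng.gauss(0, noise) for x in xs]
        slope, intercept = fit_line(xs, ys)
        for x in new_xs:
            line_err += (slope * x + intercept - truth(x)) ** 2
            dots_err += (connect_dots(xs, ys, x) - truth(x)) ** 2
    count = trials * len(new_xs)
    return line_err / count, dots_err / count


line_mse, dots_mse = compare()
print(f"straight line error: {line_mse:.2f}")
print(f"wiggly line error:   {dots_mse:.2f}")
```

The wiggly line passes through every observed point and still does worse in between, because it faithfully reproduces the noise. The caveat from earlier applies: the straight line only wins here because the expectation of a straight line happens to be right.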

So I want to give you some examples of when bias is obviously useful. And rather than illustrate with my usual ‘ideologies are what brains do’ thing, since I do that plenty, I’ll stay in the behavioural economist lane.

System 1 and System 2 a.k.a. be rational a.k.a. get out of the amygdala a.k.a. get into the parasympathetic etc

I’d be surprised if you hadn’t come across System 1 and System 2 before. Kahneman’s 2011 book is probably one of the most popular pop-psych books ever, and for good reason. But I’ll reprise it in a couple paragraphs for those who haven’t had the pleasure, before showing you how most people get it wrong.

Kahneman, alongside his colleague Amos Tversky,3 birthed the field of behavioural economics and the subject of bias with their project on ‘cognitive illusions’ in the late ’60s. In this book, forty-odd years later, Kahneman gives us a nice overarching narrative about how this works. We have:

- System 1: a black box that describes all our cognitive processes that are fast, instinctive or unconscious, and emotional.

- System 2: a black box that describes all our cognitive processes that are slow, effortful or conscious, and more deliberative. I don’t think it’d be correct to say this is not emotional, because all action seems to require emotion, but it’s certainly more about long-term emotions, or something like this anyway.

Now, if you haven’t heard of System 1 and System 2, you’ve probably heard one of their analogues.4 People who say ‘don’t let your amygdala hijack your frontal lobes’, or ‘get out of the sympathetic and into the parasympathetic nervous system’, or ‘something something vagus nerve’ are using pseudo-brain science to get at the same thing. Or, you know, ‘be rational’ and ‘think logically’ are the old-school versions.

But the thing everyone seems to have taken away from this book is the thing we always take away—System 1 stuff is bad.

This is not what Kahneman was going for.5 Kahneman was trying to show us how both System 1 and System 2 have their place. In the very first section of his book he shows us how System 1 helps us solve 2+2, and work out the source of a noise, and (for literate people) read the text on a warning sign or billboard. As I talk about in more detail elsewhere, we’d be rather unfortunate creatures indeed without this kind of fast, automatic processing.

He then goes on to distinguish heuristics from biases. Heuristics are the basic tool of System 1—mental shortcuts that enable quick thinking. They help us navigate complex situations efficiently so we can save our processing power for more difficult tasks. Biases, on the other hand, are when these heuristics go wrong. When our heuristic leads to us making an error.

This language comes from economics, where they:

work off the assumption that humans are ‘rational actors’. They call it the ‘rational-actor model’. The idea, more-or-less, is that if you give a human a decision to make, they will decide by optimising for their preferences, weighing up the costs and benefits. You do stuff that does the most good for you, and the least bad. On this model, errors should only happen when you don’t have the right information to make the ‘rational’ decision.

…

‘biases’ … are times when we deviate from this model. When we make choices that are not optimised to our preferences, maximising benefit and minimising cost, despite having accurate information.

But, in the language of statistics, both heuristics and biases are bias. It’s all an effort to reduce noise. To make the complexity of the world less complex to process, so we have spare capacity to handle more difficult problems. Sometimes these biases lead to errors, but more often they make us more accurate much faster.

System 1 is a good girl. She’s trying her best.

A sympathetic and parasympathetic alternative

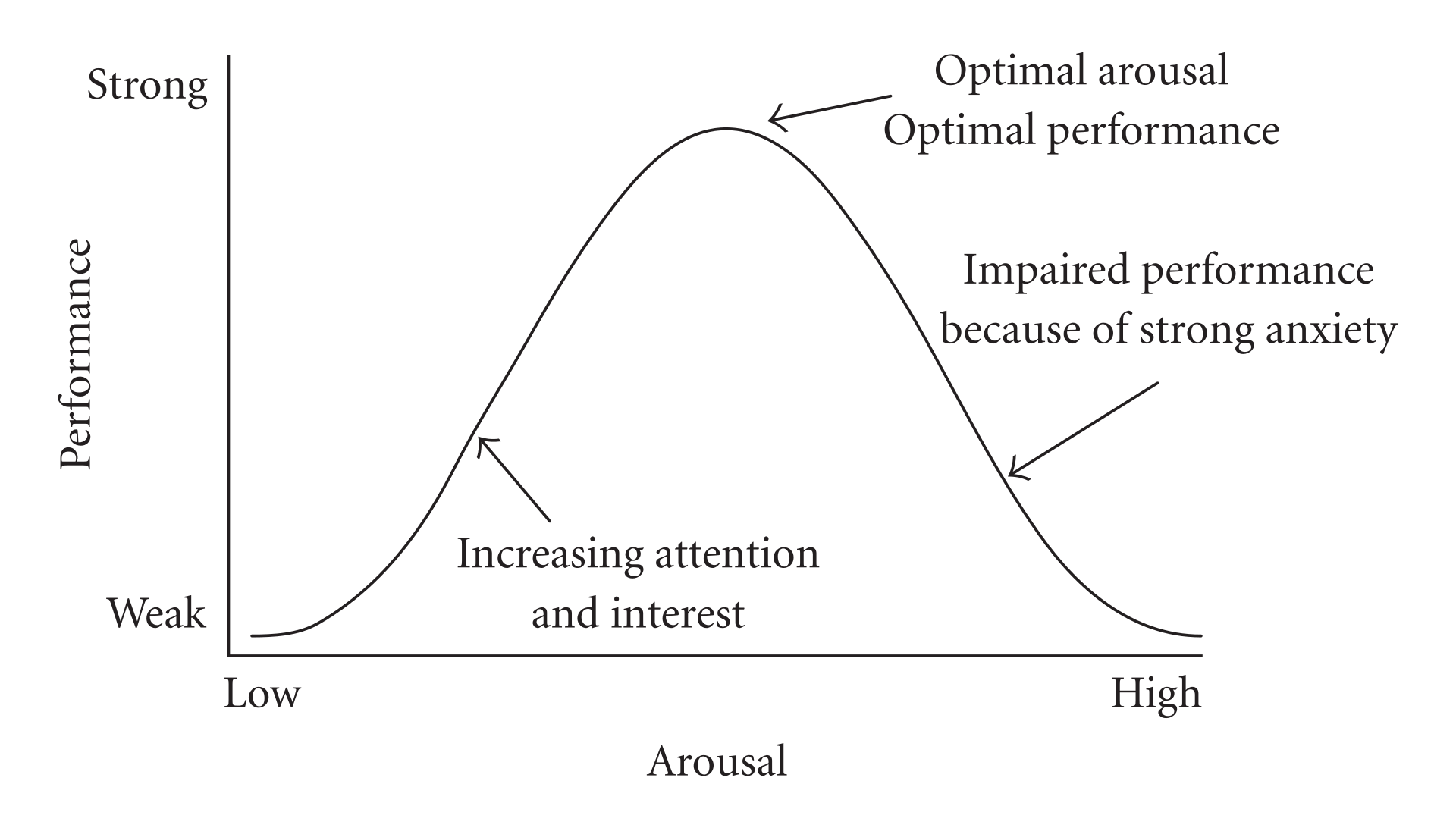

Taking up a couple ideas from the last section, I like to talk a lot about the Yerkes-Dodson Law:

As arousal increases, so does performance. To a point. Eventually, as arousal continues to increase, performance starts to decrease. Wikipedia.

As I say in my article on the subject:

What our diagram shows is that as arousal increases, so does our performance in a given task. We need arousal to perform. This kind of anxiety motivates us to perform, and it recruits all those physical and mental resources so important to doing something well.

This is the role of the sympathetic nervous system—to mobilise all the cognitive and physical resources we need to meet the task at hand.

But something I don’t really talk so much about in my article describing how stress is good is the fact that as stress increases, our performance increases, but our cognitive flexibility decreases. More stress makes us rely on more stereotypical behaviour, and less on reward-seeking and exploratory behaviour because we don’t have the bandwidth to think more flexibly.6

In many ways, this could be considered an increase in System 1 (fast) thinking, and a decrease in System 2 (slow) thinking. I write about this in more detail here. But, obviously, if you’re stressed, it often makes sense to solve a problem more quickly and with fewer resources, so we do. This has risks, of course, but being more deliberative would only increase our stress levels. And, as our curve shows us, too much stress and our performance will start to decline.

So we don’t want to get out of the sympathetic and into the parasympathetic, or avoid the System 1 for the System 2. We want to ride the sympathetic, and use the System 1.

Bias beats game theory

Ok, one last random example, since this is becoming a bit of a hodge-podge article. But this one is kind of fun, and short enough that I’ll never make it into its own article.

In the late 70’s, political scientist Robert Axelrod ran a tournament for robots. This was basically a competition based on the prisoner’s dilemma, which:

is a game theory thought experiment involving two rational agents, each of whom can either cooperate for mutual benefit or betray their partner (“defect”) for individual gain.

This is a favourite experiment to run for behavioural economists and frankly all the other flavours of social scientist too.

In Axelrod’s competition, the idea was for various players to create computer programs that would ‘win’ against each other in the dilemma. The bots could take in an opponent’s action (to cooperate or to defect), and then decide how to respond (by either cooperating or defecting).

The bots could be as complicated as the players liked. You might make a bot that only cooperated when its opponent cooperated a lot, and otherwise defected. Or you might make one that defected all the time, regardless of what its opponent did.

Each bot played hundreds of rounds against each opponent, across a series of different tournaments, but the bot that won every tournament was the ‘tit-for-tat’ bot.

‘Tit-for-tat’ starts off by cooperating on its first turn, and then after that, it does whatever the opponent did last.

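Now, ‘Tit-for-tat’ didn’t win its individual matches. Because it only ever copies its opponent, it can never outscore the bot in front of it: the best it can do is tie. But ‘Tit-for-tat’ would always end up with the highest total score across the tournament.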
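To see how a bot can top the table without beating anyone head-to-head, here is a toy round-robin. It is a sketch, not a reconstruction of Axelrod's tournament: the five bots are a hypothetical field chosen for illustration, the payoffs (5, 3, 1, 0) and the 200 rounds per match are the values usually quoted for the original, and a different field could easily produce a different ranking.

```python
from itertools import combinations

# (my move, their move) -> my points. "C" = cooperate, "D" = defect.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(mine, theirs):
    """Cooperate first, then copy the opponent's last move."""
    return theirs[-1] if theirs else "C"


def suspicious_tit_for_tat(mine, theirs):
    """Like tit-for-tat, but opens with a defection."""
    return theirs[-1] if theirs else "D"


def always_defect(mine, theirs):
    return "D"


def always_cooperate(mine, theirs):
    return "C"


def grudger(mine, theirs):
    """Cooperate until the opponent defects once, then defect forever."""
    return "D" if "D" in theirs else "C"


def play_match(bot_a, bot_b, rounds=200):
    """Play one iterated match and return both bots' total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = bot_a(hist_a, hist_b)
        move_b = bot_b(hist_b, hist_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


def tournament(bots, rounds=200):
    """Round-robin: every bot plays every other bot once. Returns (total scores, match wins)."""
    totals = {bot.__name__: 0 for bot in bots}
    match_wins = {bot.__name__: 0 for bot in bots}
    for bot_a, bot_b in combinations(bots, 2):
        score_a, score_b = play_match(bot_a, bot_b, rounds)
        totals[bot_a.__name__] += score_a
        totals[bot_b.__name__] += score_b
        if score_a > score_b:
            match_wins[bot_a.__name__] += 1
        elif score_b > score_a:
            match_wins[bot_b.__name__] += 1
    return totals, match_wins


field = [tit_for_tat, suspicious_tit_for_tat, always_defect, always_cooperate, grudger]
totals, match_wins = tournament(field)
for name in sorted(totals, key=totals.get, reverse=True):
    print(f"{name:24s} total={totals[name]:5d}  matches won={match_wins[name]}")
```

In this particular field, `tit_for_tat` finishes with the highest total while winning zero matches. It loses narrowly to `always_defect` and ties everyone else, but it never gets dragged into the long mutual-defection slogs that sink the nastier bots.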

The tournament went on to be used by game theorists and political scientists to make a fuss about cooperation dynamics, Axelrod himself publishing a book with ‘cooperation’ in the title basically every ten years since.

But what I want to use it for here is to show that, no matter how much deliberation these other bots had coded into them, the very simple, very biased ‘Tit-for-tat’ bot won every tournament.

Bias wins.

Outro

It’s not that complicated. The world is noisy. Bias makes the noise less distracting. Errors are bad, but errors can come from biases that went astray just as easily as from unbiased calculations that used irrelevant data. On balance, bias is good.

1. Well, I guess it would actually be a pretty reliable measure of how long since it was last switched on? Mm, like I said. Hard to stretch the metaphor, but you get the point. Random is what I was going for.

2. Obviously a toy example, but not that far-fetched. There is this famous study on decision fatigue, where researchers analysed cases from Israeli judges and found that judges were significantly more likely to grant parole or favourable rulings immediately after a mealtime break (like lunch) than before the break. And generally, as they progressed through the day and their decision-making resources became depleted, their rulings became harsher. If you happened to look at the wrong aspect of this, you might determine a judge is particularly harsh, when really they were just tired and hungry. You get it, I hope.

3. And a more beautiful ‘romantic friendship’ there was not.

4. System 1/2 are actually just the most famous example of a lot of literal analogues, not just the metaphorical ones I’m about to describe. The literal analogues are generically termed dual process theories, and make roughly the same point. Automatic, implicit, and unconscious forms of decision-making vs controlled, explicit, and conscious ones.

5. Well. Not exactly what he was going for. But he does really concentrate a lot on the ‘bias is bad’ side of things. So perhaps we can be forgiven a little for taking away that message.

6. See e.g. here or here or here or this commentary on this article. But really this is well-known in animal models of behaviour.

Ideologies worth choosing at btrmt.
