The trap of scientific evidence

December 18, 2021

Excerpt: There is an odd tension in the journalistic use of ‘no scientific evidence’. No evidence for something is our scientific method telling us that we should accept alternative explanations. Unless, of course, we never tested that thing in the first place, in which case our claim of no evidence has no particular meaning at all. But something interesting happens in the middle ground between these two spaces, when scientists must bridge the gap with a funny kind of scientific common sense. The result is often rather lazy.

The scientific claim of ‘no evidence’ both indicates that we have evidence something isn’t true, and that no one has really looked. This fact bears forth the alluring capacity for science to sweep inconvenient truths aside rather than tackle them.

Article Status: Complete (for now).

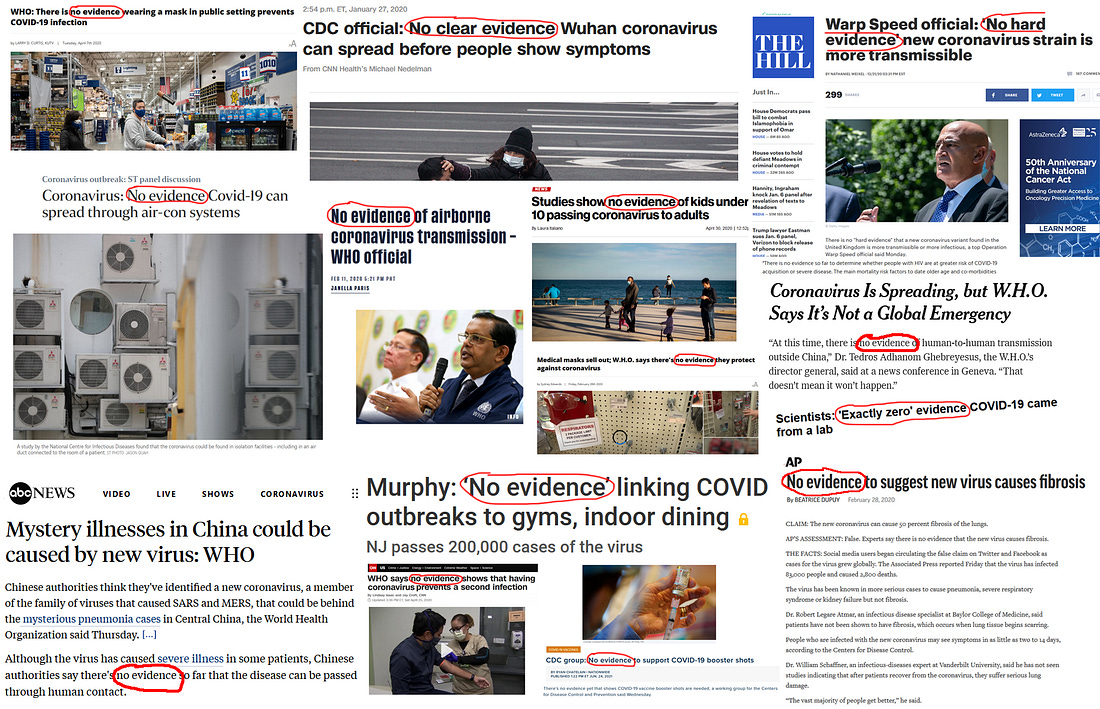

In a recent Astral Codex Ten post, celebrated rationalist psychiatrist Scott Alexander details the odd tension in the journalistic use of ‘no scientific evidence’. First, he displays a series of historic claims from media outlets about the SARS-CoV-2 virus:

Highlights include: "no evidence of human-to-human transmission outside China",

"no evidence wearing a mask in a public setting prevents COVID-19 infection",

and "No evidence of airborne transmission". via Astral Codex Ten.

Alexander goes on:

Every single one of these statements that had “no evidence” is currently considered true or at least pretty plausible … these headlines are accurate. Officials were simply describing the then-current state of knowledge … if there hasn’t been a study showing something, then there’s “no evidence” …

He compares these to another couple of recent headlines:

- No Evidence That 45,000 People Died Of Vaccine-Related Complications

- No Evidence Vaccines Cause Miscarriage

and notes:

I don’t think the scientists and journalists involved in these stories meant to shrug and say that no study has ever been done so we can’t be sure either way. I think they meant to express strong confidence these things are false.

The tension in the claim ‘no evidence’

Alexander’s point is that this same term, ‘no evidence’, is used to mean both that:

- despite common sense appeal, there’s no evidence (yet) because we haven’t checked so we’re not sure about it; and

- despite appeal (common sense or no), we have checked and we’re pretty certain that this isn’t a thing.

As Alexander comments, “[t]his is utterly corrosive to anybody trusting science journalism”. True enough.

Somewhat less charitably we might say, as another celebrated rationalist, the Zvi, did:

This is not an ‘honest’ mistake. This is a systematic anti-epistemic superweapon engineered to control what people are allowed and not allowed to think based on social power, in direct opposition to any and all attempts to actually understand and model the world and know things based on one’s information.

While this is certainly true sometimes, I’m not sure it is typical. It more often seems like confusion, or academic laziness.

Really, as Alexander tells us, this is something of a naturalistic outcome of our scientific method. Much science starts with a ‘null hypothesis’: we assume by default there is no effect. Then we test that assumption and if we find there is some effect we ‘reject the null hypothesis’, and assume that, in fact, there is some effect. This is because the core principle of our scientific method is not so much about proving effects, it’s about demonstrating which claims are incorrect.1

Without reading the articles, we can assume that in the case above, “No Evidence That 45,000 People Died Of Vaccine-Related Complications”, the authors investigating would have assumed this wasn’t true—the null hypothesis. They would have tested it and found no evidence that would have caused them to reject the null hypothesis. Thus, there is still no evidence that 45,000 people died of vaccine-related complications. The null hypothesis is not rejected. But here, what that means is that we’re pretty sure that this claim of no evidence is true.

No evidence for something is our scientific method telling us that we should accept alternative explanations. An excellent solution, unless of course, we never tested that thing in the first place, in which case our claim of no evidence has no particular meaning at all.

For example, we could claim that there is no evidence parachute use prevents death when falling from planes as this joke BMJ article did. They point out that “As with many interventions intended to prevent ill health, the effectiveness of parachutes has not been subjected to rigorous evaluation by using randomised controlled trials”.

Their point is that under a paradigm of evidence-based medicine, there is no evidence for the adoption of parachutes. This should not, however, incline us to not use them when jumping out of planes. As we say, absence of evidence is not evidence of absence.
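To make the difference between ‘we checked’ and ‘we barely checked’ concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than anything drawn from the articles above: a coin that really does land heads 60% of the time, a one-sided exact binomial test at the conventional 5% level, and the hypothetical helper names `upper_tail`, `critical_value` and `power`. It computes how often a study of a given size would detect the bias at all.

```python
from math import comb


def binom_pmf(k, n, p):
    """P(X == k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(binom_pmf(i, n, p) for i in range(k, n + 1))


def critical_value(n, alpha=0.05):
    """Smallest head count k whose tail probability under a fair coin
    (the null hypothesis) is at most alpha. Seeing k or more heads
    would lead us to reject the null."""
    for k in range(n + 2):
        if upper_tail(k, n, 0.5) <= alpha:
            return k


def power(n, true_p, alpha=0.05):
    """Probability that a study of n flips rejects the null
    when the coin's real heads probability is true_p."""
    return upper_tail(critical_value(n, alpha), n, true_p)


# Assumed scenario: the effect is real (heads 60% of the time).
for n in (10, 400):
    print(f"{n} flips: chance of finding evidence = {power(n, 0.6):.3f}")
```

Under these assumptions, a ten-flip study reports ‘no evidence of bias’ about 95% of the time even though the bias is real, while a 400-flip study rarely does. The same headline can carry very different weight depending on how hard anyone looked.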

A scientific claim of no evidence only goes so far. And as the Zvi cogently points out:

If someone uses ‘no evidence’ as a synonym for false, as opposed to a synonym for ‘you’re not allowed to claim that’ then this is not merely evidence of bullshit. It is intentionally and knowingly ‘saying that which is not.’

The tension when there is evidence

There is much wrong with our little scientific ritual, beyond that which one could intuit from these kinds of convolutions. But Alexander goes on to highlight another problem with this kind of scientific ambivalence, entirely by accident.

Is there “no evidence” homeopathy works? No, there’s a peer-reviewed study showing that it does. Don’t like it? I have eighty-nine more peer-reviewed studies showing that right here. But a strong theoretical understanding of how water, chemicals, immunology, etc operate suggests homeopathy can’t possibly work, so I assume all those pro-homeopathy studies are methodologically flawed and useless, the same way somewhere between 16% and 89% of other medical studies are flawed and useless. Here we should reject journal articles because they disagree with informal evidence!

Homeopathic treatment has the practitioner dilute the active ingredient of the treatment until the treatment is chemically indistinguishable from the diluent. That is, until the active ingredient is literally gone—you just have two bottles of whatever you were using to dilute with.

Alexander’s point is that, since this is the case, it should be common sense that homeopathy doesn’t do anything and so we should disregard the academic articles that demonstrate that it does.

The logic is something like “the same rational2 common sense that tells us to disregard the science about parachute use should tell us to disregard the science about homeopathy”.
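If we take the ‘Bayesian’ reading of this common sense seriously, it amounts to the odds form of Bayes’ rule: posterior odds equal prior odds multiplied by the likelihood ratio of the evidence. The sketch below is only an illustration. The function name `posterior` is hypothetical, and the priors and the likelihood ratio are invented numbers, not estimates of anything about homeopathy or its studies.

```python
def posterior(prior, likelihood_ratio):
    """Update a prior probability using the odds form of Bayes' rule.

    likelihood_ratio is how much more likely the observed evidence is
    if the claim is true than if it is false.
    """
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


# Assumed inputs: both readers grant the studies the same evidential
# weight (a likelihood ratio of 10) but start from different priors.
skeptic = posterior(prior=1e-6, likelihood_ratio=10)
open_minded = posterior(prior=0.2, likelihood_ratio=10)

print(f"skeptic:     {skeptic:.6f}")
print(f"open-minded: {open_minded:.3f}")
```

With these made-up inputs, the same body of studies moves the open-minded reader to roughly 70% while leaving the skeptic at about one in a hundred thousand. The studies do the same work in both cases; the disagreement lives almost entirely in the prior.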

The scientific belief system

This logic doesn’t check out.

The scientific mentality is, as I have pointed out before, a belief system like any other. Indeed, all knowledge falls within a system of belief:

We believe in things and some things that we believe in, we feel so sure about that we call it knowledge.

And all belief systems are prone to error.

The scientific emphasis of secular Western culture since at least the Enlightenment introduces a terribly high barrier to entry on certain kinds of knowledge.

Anything that sits outside the accepted scientific milieu is prone to intense skepticism. Homeopathy, astrology, herbalism, crystal healing, chiropractic therapy, the list goes on.

For some people, because the scientific milieu rejects them, they become the humorous counterexamples of rationalist bloggers.

The scientific belief system has us accepting that we must disregard the science in some cases, but rejecting that notion out of hand on the other. This is the error of the scientific belief system. The same error as any belief system. The bias of people who aren’t thinking very hard.

As I said, this academic laziness seems more common than the weaponised use-case that our earlier, less contented rationalist, the Zvi, described. Indeed, he points this out himself in his own, later article.

But all of these phenomena are popular because people claim that they work. For some people these fall into the same category as parachutes—for them, the scientific method has failed these phenomena, but that does not mean they are illegitimate. This opinion is often worth a more tender examination.

Science is a destructive force

And perhaps, even this claim of laziness is unfair. Perhaps it would be more fair to say that the missing link for our scientific enthusiasts is that the theoretical framework that these things are based upon lacks common sense appeal.

Homeopathy is based on a theory of medicine that is very common throughout time and culture: that “like cures like”. It appears in traditional Chinese medicine, in Old and New World medicines, and in Ayurveda. This is reasonable enough to accept. Like often does cure like. And yet, homeopathy then goes on to dilute that very thing into oblivion. In fairness, it does leave one puzzled.

But I don’t think a claim of laziness is typically uncharitable.

Here, we have a fairly cut and dried example of empiricism. On even the looser empirical conception of William James that is closer to Alexander’s point:

a meaningful conception must have some sort of experiential “cash value,” must somehow be capable of being related to some sort of collection of possible empirical observations under specifiable conditions

We don’t simply know that parachutes should work, we observe them at work. Not scientifically, but anecdotally. If it were ethically possible to conduct an experiment in which some jumpers used a parachute and some did not, we would be unsurprised to find that jumpers with parachutes died less often than jumpers without. This is because we have the experiential cash value and the empirical observations. Five minutes on Red Bull’s YouTube channel will provide that free of charge. Alexander is happy with this.

In the case of homeopathy, we are observing that it works both anecdotally and scientifically, but we don’t quite know why. Despite having the experiential cash value and the most rigorous empiricism, Alexander is unhappy. The difference is the why.

Granted, some number of these studies truly are likely to be poorly constructed. But this off-hand dismissal smells a little like a case of Alexander stumbling into his own ‘no evidence’ trap. Especially given that we still don’t particularly know how many common drugs work.

Alexander’s point is something along the lines of ‘when science fails us, we should use common sense’3. But I suggest that the common sense here is not that we should simply disregard all ninety of his homeopathic studies, but that perhaps something else is at play beyond the questionable theories of a 200-year-old physician.

The fact of the matter is that the scientific method is, by design, destructive. It was developed, in part, as a tool for dissolving the dominant institutions of the time both religious and political.

A tool for refuting claims does not spend a great deal of time building them. Without care, we continue to traverse the road of knowing more and more about less and less.

Perhaps, then, a more fitting criticism of the scientific mentality is that it spends too much time on a certain kind of destructive knowledge, and too little time on those kinds more expansive.

But here we have an opportunity to open ourselves to new ways of knowing. Rather than simply disregarding the fact that, at least for some, phenomena like homeopathy do work, because their shape doesn’t fit into the empirical house we have built, we can build a new house.

New ways of knowing

Phenomena like homeopathy do something. Dismissing them out of hand is lazy. It might even be the most lazy thing, because if we were so inclined, we could explain many of these things lazily (or indeed, with great rigour if we preferred).

One obvious solution to the homeopathy problem is to appeal to the placebo effect. If you make someone believe that a therapy will work, it often does regardless of whether that therapy is real or fake. This is true more often than we might be comfortable admitting.

Indeed, placebos are notoriously hard to implement. One of the most difficult aspects is stopping participants from breaking blind—that is, realising that they’re in the placebo condition. So we might have a case in which the participants are doubly sure the therapy will work because they can often tell that they’re not in the placebo group. If homeopathy is a placebo, maybe it’s often acting as some kind of super-placebo.

But even if they are completely deceived, it might not matter because placebos seem to work just fine when the participant knows full well that they’re getting a placebo.

Or maybe it’s not a placebo at all, but we could certainly afford to do a little more digging to work it out.

Astrology is an example that benefits from a little digging. Let’s ignore, for now, that the movement of the moon and sun drastically changes how water works, and that the sun helps us produce a crucial hormone for physical wellbeing, and thus that the idea that other cosmic bodies might have some effect on us is not totally insane. Let’s also ignore the fact that astrology has a history that spans possibly the entire history of human civilisation, that these myriad approaches to the discipline make it near impossible to pin down for empirical examination, and that cosmic bodies are quite difficult to wrangle into experimental form.

Let’s simply take the Forer effect as a starting point. When we encounter statements or signs that “hold personal meaning for us, or align to our beliefs and values, we tend to assign it importance and thus ‘validate’ it as true regardless of its actual merit”. When we read a horoscope, we are inclined to see into a mirror that reveals to us things that are important to us. We resonate with the claims that hold power for us, those things with the most influence on our psyche, the kratophanies.

This is far from nothing. This seems quite valuable indeed. Perhaps a horoscope is not predicting our future, but helping us identify what kind of shape we want it to take. With this new understanding in mind, the chances that we will see the opportunities to make it so are illuminated for us.

Even if this is all that astrology does, is it not enough?

Outro

Scientists are often scientists only when it is necessary, or convenient. The rest of the time they are subject to the same frail processes of thought that all humans suffer.

The scientific bias is a destructive one, and often a lazy one. ‘No evidence’ might indicate evidence against a claim in the same breath as it indicates that no one has looked for any. It is a ‘rational’ common sense that ignores things, either because it can’t be bothered to figure out why those things might be, or because it is too much indulged in the destruction of things that can’t be.

It is an ideology like any other, making some chaos into order, but leaving some order unexamined. It is up to us to rescue these things from the void.

Which, it is worth adding, is not to say that the method cannot demonstrate that some explanations are more cogent or reasonable than others. ↩

Or rather, Bayesian, to be precise. ↩

Or, I guess, Bayesian priors? One does wonder if that’s common sense though, given only some small proportion of those reading this know what that is. ↩

Ideologies worth choosing at btrmt.